Software developers

Debug smarter, innovate faster. We know developers do not want to spend all their time debugging and troubleshooting. They want to create. The pressure to log less can also be frustrating. Now you can access high-value logs when you need them, without fear of exceeding your observability budget.

Eliminate firefighting

Software development teams have more responsibilities than ever. The last thing your engineers need is more issues in their queue. A recent report found that over 50% of developers use telemetry data daily for debugging and troubleshooting. Equip your development, support, and operations teams to become better problem solvers. With a telemetry pipeline, they get access to the right data and insights to solve problems faster.

Root cause in minutes, not hours

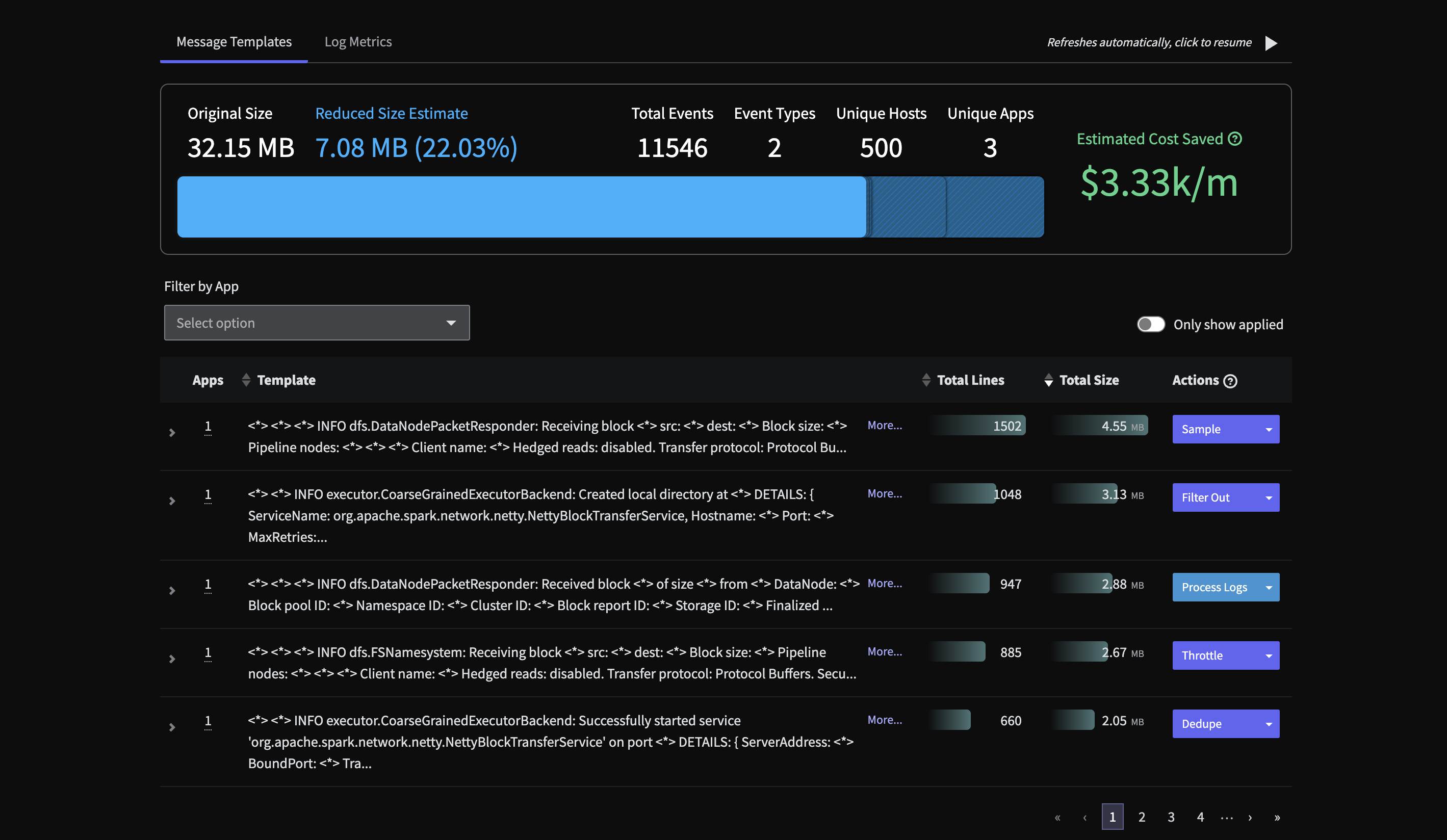

Enhance observability by improving the signal-to-noise ratio of your telemetry data and routing it to the relevant destinations. Aligning data formats across platforms improves interoperability and root cause identification, while data enrichment adds the context needed to understand a problem. A collaborative team working from better data resolves issues more effectively.

Identify specific values, such as response time, to create metrics. Mezmo's Events to Metrics Processor provides an easy way to create a new metric event within the pipeline, typically from an existing log message. The new metric event can use data from the log to generate the metric, including the desired value.
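As a rough illustration of the idea, the sketch below derives a metric event from a log message. It is not Mezmo's processor or its configuration format: the `response_time=...ms` log layout, the `log_to_metric` function, and the metric field names are all assumptions made up for this example.

```python
import re
from typing import Optional

# Assumed log layout for this sketch: "... response_time=182ms ..."
RESPONSE_TIME = re.compile(r"response_time=(\d+(?:\.\d+)?)ms")


def log_to_metric(log_event: dict) -> Optional[dict]:
    """Build a gauge-style metric event from a log event.

    Returns None when the message carries no response time.
    """
    match = RESPONSE_TIME.search(log_event.get("message", ""))
    if match is None:
        return None
    return {
        "name": "response_time_ms",  # hypothetical metric name
        "kind": "gauge",
        "value": float(match.group(1)),  # the value taken from the log
        "tags": {"app": log_event.get("app", "unknown")},
        "timestamp": log_event.get("timestamp"),
    }


log = {
    "timestamp": 1700000000,
    "app": "checkout",
    "message": "GET /cart 200 response_time=182ms",
}
metric = log_to_metric(log)
```

The original log can still be routed or dropped independently. The metric event is a new, much smaller record that carries only the value and the tags you chose to keep.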

Our Active Telemetry Platform expands the usability of your telemetry data. Pipeline gets it in the right format, routes it to every team that needs it, and integrates it with your current analytics tools. Data enrichment adds context for easier troubleshooting. This helps your team manage application performance, service reliability, and security more efficiently.

Smarter data, better observability

Telemetry Pipeline is the real-time engine behind Mezmo's Active Telemetry approach. It sits between your telemetry sources and your observability platforms, giving you full control over what gets ingested, where it goes, and how it behaves.

Enable developers to self-serve while maintaining centralized governance

Intelligently direct telemetry to appropriate destinations in real-time

Process, enrich, and transform data before storage or indexing
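The flow described above (process and enrich events, then direct each one to a destination before anything is stored or indexed) can be sketched in a few lines. This is a conceptual model only, not Mezmo's API: the processor functions, the `SERVICE_OWNERS` lookup table, the destination names, and the routing rules are hypothetical.

```python
from typing import Callable, Dict, Iterable, List, Optional

Event = dict
Processor = Callable[[Event], Optional[Event]]

# Hypothetical lookup table standing in for an external metadata source.
SERVICE_OWNERS = {"checkout": "payments-team", "search": "discovery-team"}


def normalize_level(event: Event) -> Optional[Event]:
    """Standardize the severity field to upper case."""
    return {**event, "level": str(event.get("level", "info")).upper()}


def enrich_owner(event: Event) -> Optional[Event]:
    """Add the owning team as extra context."""
    owner = SERVICE_OWNERS.get(event.get("app"), "unassigned")
    return {**event, "owner": owner}


def route(event: Event) -> str:
    """Pick a destination name for an event (assumed rules)."""
    if event["level"] in {"ERROR", "FATAL"}:
        return "observability_platform"
    if event["level"] == "DEBUG":
        return "drop"
    return "cold_storage"


def run_pipeline(
    events: Iterable[Event], processors: List[Processor]
) -> Dict[str, List[Event]]:
    """Apply each processor in order, then group events by destination."""
    routed: Dict[str, List[Event]] = {}
    for event in events:
        for processor in processors:
            event = processor(event)
            if event is None:  # a processor may discard the event
                break
        else:
            destination = route(event)
            if destination != "drop":
                routed.setdefault(destination, []).append(event)
    return routed


events = [
    {"app": "checkout", "level": "error", "message": "payment failed"},
    {"app": "search", "level": "debug", "message": "cache miss"},
    {"app": "cart", "level": "info", "message": "item added"},
]
result = run_pipeline(events, [normalize_level, enrich_owner])
```

In this sketch, only the error event reaches the hypothetical `observability_platform` destination, the info event goes to `cold_storage`, and the debug event is dropped before it is stored anywhere.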

Key capabilities

Remove low-value logs (DEBUG/INFO) to reduce noise and manage costs effectively. Learn more about supported processors.

Decide what stays hot, what moves to cold storage (S3, Azure Blob, GCP), or gets dropped. Learn more about supported destinations.

Stream telemetry data in real-time and replay buffered events for instant incident investigation without waiting for indexing or storage delays.

Continuously analyze telemetry patterns to identify high-volume, low-value data streams and provide actionable recommendations for cost optimization. Learn how to use data profiling.

Automatically adapt pipeline behavior based on real-time conditions, scaling processing capacity and adjusting sampling rates to maintain performance during traffic spikes.

Enhance telemetry data with contextual metadata from external sources, standardize formats, and add business context to improve observability and enable better analysis.

Monitor and control metric cardinality in real-time to prevent exponential cost increases from high-cardinality tags while preserving essential dimensional data.
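To make the cardinality point concrete, here is a minimal sketch of one common way to cap it: allow a fixed number of distinct values per tag and fold any further values into a single placeholder. The `CardinalityLimiter` class, the limit, and the `__other__` placeholder are assumptions for illustration, not a description of how Mezmo implements this capability.

```python
from collections import defaultdict


class CardinalityLimiter:
    """Cap the number of distinct values kept for each metric tag."""

    def __init__(self, max_values_per_tag: int, placeholder: str = "__other__"):
        self.max_values_per_tag = max_values_per_tag
        self.placeholder = placeholder
        self._seen = defaultdict(set)  # tag key -> values admitted so far

    def apply(self, metric: dict) -> dict:
        """Return a copy of the metric with over-limit tag values replaced."""
        tags = {}
        for key, value in metric.get("tags", {}).items():
            seen = self._seen[key]
            if value in seen or len(seen) < self.max_values_per_tag:
                seen.add(value)
                tags[key] = value
            else:
                # New value past the limit: keep the tag, hide the value.
                tags[key] = self.placeholder
        return {**metric, "tags": tags}


limiter = CardinalityLimiter(max_values_per_tag=2)
outputs = [
    limiter.apply({"name": "requests", "value": 1, "tags": {"user_id": uid}})
    for uid in ["u1", "u2", "u3", "u1"]
]
```

With a limit of two, `u1` and `u2` pass through unchanged, `u3` is rewritten to the placeholder, and a later `u1` is still recognized. The number of distinct series per tag stays bounded, while the values seen first remain queryable.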

Real results from real teams

"Mezmo helped us reduce our overall telemetry data volume by 50% by filtering and parsing data to ensure we only indexed the fields we actually needed."

— Netlink Voice

"Teams using Mezmo consistently reduce mean time to resolution by 30-50% through cleaner, more relevant data feeding into their observability platform."

— Platform Engineering Team

"Using Data Profiler, we discovered we were sending massive amounts of verbose health check logs. Mezmo helped us create cost metrics and optimize our pipeline."

— Schier Engineering

Stop paying to store noise

Start capturing signals

- ✔ Schedule a 30-minute session

- ✔ No commitment required

- ✔ Free trial available